The Information Technology (IT) environment at most organizations is one of constant innovation that brings an influx of new information and data. Managing this, along with maintaining existing processes, requires a sound business strategy. Today, the Office of Information Technology (OIT) at the University of Texas at San Antonio comprises approximately 150 full-time employees. The staff is made up of typical enterprise teams such as developers, server and network administrators, and other positions vital to the daily operations of an IT department. In the past, however, the culture of OIT was that of a traditional IT shop in that teams struggled to keep up with day-to-day maintenance and operations.

Traditionally, maintaining existing services, upgrading existing applications, and implementing new business applications, along with other tasks, consumed the majority of our time, leaving employees no opportunity for creative development. Despite these many responsibilities, management still expected employees to innovate and craft breakthrough ideas for technology. We realized this workload and structure were inefficient and perhaps even hindered innovative development. We needed a team dedicated to keeping UTSA at the forefront of technology while providing the university with a competitive, strategic advantage. In the course of our research, we discovered the concept of Bimodal IT and decided to implement it at UTSA.

Gartner defines Bimodal IT as “having two modes of IT, each designed to develop and deliver information and technology-intensive services in its own way.” Mode One is traditional, emphasizing safety and accuracy. Mode Two is non-sequential, emphasizing agility and speed. Simply put, Mode One is slow and cautious, like a marathon runner, while Mode Two is fast, like a sprinter.

We needed a Mode Two team that was innovative and not impeded by daily maintenance and operational tasks. The original idea for the Mode Two team involved moving quickly toward implementing innovative solutions while keeping in mind that not everything the team did would prove successful. We adopted a “fail fast” strategy that freed us from spending months on a project only to discover that it was not going to work for one reason or another.

An essential Mode Two practice is to deliver a “proof of concept” rather than build a project to scale for the entire environment. Mode One team members implement and build the project to scale only after the “proof of concept” in Mode Two has proven successful. To move toward a successful implementation phase, it is crucial for the Mode Two team to provide thorough documentation for the Mode One group.

Forming the Two Modes

Ken Pierce, UTSA’s former Chief Information Officer (CIO) for IT and Vice Provost, fully supported the Bimodal IT concept, which helped pave the way for forming the new team. The first move was to begin assembling the new Mode Two team. OIT selected a director from the systems side of IT along with two technical staff members from the server and desktop support sides of the house. The staff members chosen for the team had a history of innovation within the organization. The director reported to the CIO, who was committed to the concept. Once the team was formed, the next step was to geographically separate the two teams by moving the Mode Two team into a separate building. This was done to ensure that the subject matter experts on the Mode Two team were not pulled back into the daily operational tasks the Mode One team was handling.

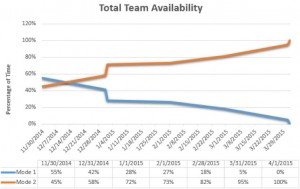

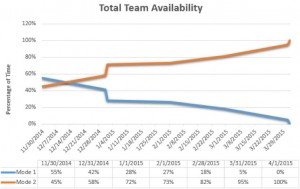

The existing role and responsibilities of the new Mode Two director were then transferred to an existing director on the Mode One side of the department. The two directors created and agreed upon a transition plan that listed all of the outstanding Mode One projects and tasks. A corresponding graph was developed to show the percentage of time that the new Mode Two staff would spend on Mode One and Mode Two projects. As the graph shows, the transition plan ran for four months, and the Mode Two staff were not completely dedicated to Mode Two work until April 1, 2015. The graph gave executive management and stakeholders a way to visualize the progress of the transition plan.
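For readers who want to build a similar chart, the time split can be sketched in a few lines of Python. This is only an illustration: the linear ramp, the December 1, 2014 start date (assumed as four months before the April 1, 2015 end date), and the function name `transition_split` are all assumptions, not the figures from our actual plan.

```python
from datetime import date


def transition_split(start: date, end: date, today: date) -> tuple[int, int]:
    """Return (mode_one_pct, mode_two_pct) for an assumed linear ramp.

    Before `start` the staff is treated as 100% Mode One; from `end`
    onward it is 100% Mode Two. The linear shape is an assumption.
    """
    if today <= start:
        return 100, 0
    if today >= end:
        return 0, 100
    elapsed_days = (today - start).days
    total_days = (end - start).days
    mode_two_pct = round(100 * elapsed_days / total_days)
    return 100 - mode_two_pct, mode_two_pct


# Assumed start date: four months before the end date given in the article.
PLAN_START = date(2014, 12, 1)
# End date from the article: staff fully dedicated to Mode Two work.
PLAN_END = date(2015, 4, 1)

checkpoints = [
    date(2014, 12, 1),
    date(2015, 1, 1),
    date(2015, 2, 1),
    date(2015, 3, 1),
    date(2015, 4, 1),
]
for checkpoint in checkpoints:
    mode_one, mode_two = transition_split(PLAN_START, PLAN_END, checkpoint)
    print(f"{checkpoint:%b %Y}: Mode One {mode_one}%, Mode Two {mode_two}%")
```

A real plan would likely step down unevenly as individual Mode One projects close out, so the ramp function is the part to replace with agreed-upon percentages.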

During the transition, two professional developers were contracted and two part-time UTSA students were hired to act as assistant developers and provide help in other areas. We hired one student from the Electrical Engineering graduate program and another from the Computer Science program at UTSA. While neither student had years of development and systems architecture experience, both had experience developing in C++, Java, and other computer technologies. Since the goal was to find students who demonstrated the aptitude and desire to learn, these two students fit the team perfectly.

Our team was finally assembled with two systems professionals, two developers, and two part-time student workers. It was time to start putting our project list together and build out the planning and documentation processes.

From the very beginning of the team’s formation, we made transparency a focus of our projects. We built the project list into a SharePoint form that was open to the entire campus so that the UTSA community could see what the Mode Two team was working on as well as enter requests for new projects. Anyone could enter a project request, but the team reserved the right to approve or reject anything on the list. The main criterion for accepting a project request was that it had to provide a business and/or student benefit.

Instead of spending large amounts of time building long, elaborate project charters and project plans, we created a process built around a simple hypothesis form requiring three key pieces of information:

- Project summary and problem statement

- High-level requirements

- Business or student benefit
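As a rough illustration, the three-field form could be modeled as a small record with a completeness check. This is a hypothetical sketch, not our SharePoint form: the class name `ProjectHypothesis`, its field names, and the sample request are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectHypothesis:
    """Hypothetical model of the three-field hypothesis form."""

    summary_and_problem: str
    high_level_requirements: list[str] = field(default_factory=list)
    benefit: str = ""  # business or student benefit

    def is_complete(self) -> bool:
        """True only when all three pieces of information are filled in."""
        has_summary = bool(self.summary_and_problem.strip())
        has_requirements = any(r.strip() for r in self.high_level_requirements)
        has_benefit = bool(self.benefit.strip())
        return has_summary and has_requirements and has_benefit


# Made-up sample request, used only to exercise the check.
sample_request = ProjectHypothesis(
    summary_and_problem="Students cannot tell which computer labs have open seats.",
    high_level_requirements=["Show seat availability per lab"],
    benefit="Students spend less time searching for a workstation.",
)
print(sample_request.is_complete())
```

The check covers completeness only. Deciding whether a stated benefit is strong enough to accept the request remains a judgment call for the team.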

A traditional Mode One trait that does not go away is the process of thorough documentation from inception to completion. Since these projects were eventually handed back over to the Mode One teams, it was imperative that build documents, lessons learned, and other information were delivered to the new team tasked with implementing the project.

Key Lessons Learned

The Bimodal concept includes two separate and fully functioning groups. It is essential that management does not forget the Mode One team or allow the Mode Two group to become known as an “elite team.” Management should remember that the Mode One team requires their attention and support because they are, after all, managing the heart of the IT infrastructure.

The leadership style of the Mode Two team should be democratic and focus on collaboration. Everything should be designed to function within a collaborative environment.

It may take time for the Mode Two team to change its mindset and ways of doing things. Managers can coach team members and remind them to think in the new way. It is important to have the full support of senior management, especially the CIO’s, when managers begin working with the Mode One team.

The two teams (Mode One and Mode Two) offer different ways of approaching projects and solving problems. When both teams are used properly and to their full potential, the entire IT department can benefit and produce impressive results.
